Data Factory: Fully managed service to build and manage information production pipelines

Organizations are increasingly looking to fully leverage all of the data available to their business. As they do so, the data processing landscape is becoming more diverse than ever before – data is being processed across geographic locations, on-premises and in the cloud, across a wide variety of data types and sources (SQL, NoSQL, Hadoop, etc.), and the volume of data needing to be processed is increasing exponentially. Developers today are often left writing large amounts of custom logic to deliver an information production system that can manage and coordinate all of this data and processing work.

To help make this process simpler, I’m excited to announce the preview of our new Azure Data Factory service – a fully managed service that makes it easy to compose data storage, processing, and data movement services into streamlined, scalable & reliable data production pipelines. Once a pipeline is deployed, Data Factory enables easy monitoring and management of it, greatly reducing operational costs.

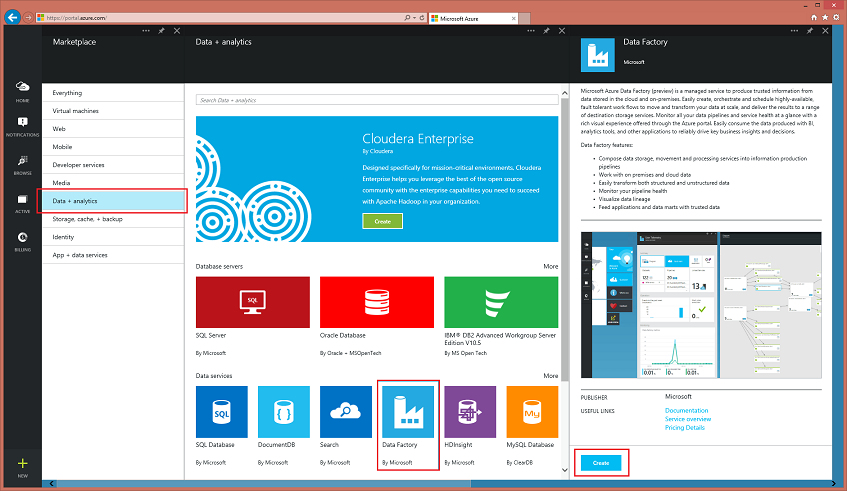

Easy to Get Started

The Azure Data Factory is a fully managed service. Getting started with Data Factory is simple. With a few clicks in the Azure Preview Portal, or via our command line operations, a developer can create a new data factory and link it to data and processing resources. From the new Azure Marketplace in the Azure Preview Portal, choose Data + Analytics -> Data Factory to create a new instance in Azure.

Orchestrating Information Production Pipelines across multiple data sources

Data Factory makes it easy to coordinate and manage data sources from a variety of locations – including ones both in the cloud and on-premises. Support for working with data on-premises inside SQL Server, as well as Azure Blob, Tables, HDInsight Hadoop systems and SQL Databases is included in this week’s preview release.

Access to on-premises data is supported through a data management gateway that allows for easy configuration and management of secure connections to your on-premises SQL Servers. Data Factory balances the scale & agility provided by the cloud, Hadoop and non-relational platforms, with the management & monitoring that enterprise systems require to enable information production in a hybrid environment.

Custom Data Processing Activities using Hive, Pig and C#

This week’s preview enables data processing using Hive, Pig and custom C# code activities. Data Factory activities can be used to clean data, anonymize/mask critical data fields, and transform the data in a wide variety of complex ways.
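As a rough illustration of the kind of cleaning and masking logic such an activity might contain, here is a minimal sketch. It is written in Python purely for illustration (a custom activity in this preview would be written in C#), and it does not use any Data Factory API. The function names, the record fields (`email`, `customer_id`, `region`), and the salt value are all hypothetical. Note that a salted hash is pseudonymization rather than full anonymization.

```python
import hashlib


def mask_email(email: str) -> str:
    """Keep the first character and the domain; hide the rest of the local part."""
    local, sep, domain = email.partition("@")
    if not sep:
        # Not a recognizable address, so hide it entirely.
        return "***"
    return f"{local[:1]}***@{domain}"


def pseudonymize(value: str, salt: str) -> str:
    """Replace an identifier with a stable, salted SHA-256 token (first 16 hex chars)."""
    digest = hashlib.sha256((salt + value).encode("utf-8")).hexdigest()
    return digest[:16]


def clean_record(record: dict, salt: str) -> dict:
    """Trim whitespace from string fields, then mask the sensitive ones."""
    cleaned = {
        key: value.strip() if isinstance(value, str) else value
        for key, value in record.items()
    }
    cleaned["email"] = mask_email(cleaned["email"])
    cleaned["customer_id"] = pseudonymize(cleaned["customer_id"], salt)
    return cleaned


# Hypothetical input row, used only to show the shape of the transformation.
raw = {"customer_id": " 10042 ", "email": "alice@example.com ", "region": "EU"}
print(clean_record(raw, salt="example-salt"))
```

Because the token is derived deterministically from the salt and the original value, the same customer maps to the same token across runs, so downstream joins and aggregations still work on the masked data.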

The Hive and Pig activities can be run on an HDInsight cluster you create, or alternatively you can allow Data Factory to fully manage the Hadoop cluster lifecycle on your behalf. Simply author your activities, combine them into a pipeline, set an execution schedule and you’re done – no manual Hadoop cluster setup or management required.
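To make the "author activities, combine them into a pipeline, set a schedule" flow more concrete, here is a small conceptual model in Python. It is only a sketch and is not Data Factory's actual authoring format or API: the `Activity` and `Pipeline` classes, the `scheduled_runs` helper, and the dataset and activity names are all made up for illustration. It shows how activities linked by their input and output datasets imply an execution order, and how a recurring schedule yields a series of run times.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import List


@dataclass
class Activity:
    name: str
    kind: str            # e.g. "Hive", "Pig", or "Custom" (illustrative labels)
    inputs: List[str]    # names of datasets this activity reads
    outputs: List[str]   # names of datasets this activity writes


@dataclass
class Pipeline:
    name: str
    activities: List[Activity] = field(default_factory=list)

    def execution_order(self) -> List[str]:
        """Order activities so each runs after the ones that produce its inputs."""
        producers = {out: a.name for a in self.activities for out in a.outputs}
        by_name = {a.name: a for a in self.activities}
        ordered: List[str] = []
        in_progress = set()

        def visit(name: str) -> None:
            if name in ordered:
                return
            if name in in_progress:
                raise ValueError(f"circular dependency involving {name}")
            in_progress.add(name)
            for dataset in by_name[name].inputs:
                upstream = producers.get(dataset)
                if upstream is not None:  # external datasets have no producer
                    visit(upstream)
            in_progress.discard(name)
            ordered.append(name)

        for activity in self.activities:
            visit(activity.name)
        return ordered


def scheduled_runs(start: datetime, interval: timedelta, count: int) -> List[datetime]:
    """Return the first `count` run times of a fixed-interval schedule."""
    return [start + i * interval for i in range(count)]


# Hypothetical pipeline: activities are listed out of order on purpose.
pipeline = Pipeline("LogProcessing", [
    Activity("AggregateByRegion", "Pig", ["CleanLogs"], ["RegionTotals"]),
    Activity("CleanRawLogs", "Hive", ["RawLogs"], ["CleanLogs"]),
])
print(pipeline.execution_order())
print(scheduled_runs(datetime(2014, 11, 1), timedelta(hours=1), 3))
```

In this model the order is derived from the data dependencies rather than declared by hand, which is one plausible way to think about how a pipeline of activities fits together.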

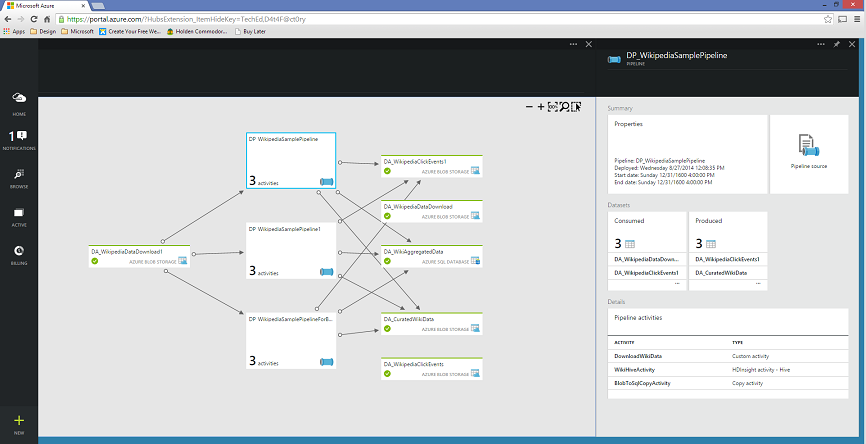

Built-in Information Production Monitoring and Dashboarding

Data Factory also offers an up-to-the-moment monitoring dashboard, which means you can deploy your data pipelines and immediately begin to view them as part of your monitoring dashboard. Once you have created and deployed pipelines to your Data Factory, you can quickly assess end-to-end data pipeline health, pinpoint issues, and take corrective action as needed.

Within the Azure Preview Portal, you get a visual layout of all of your pipelines and data inputs and outputs. You can see all the relationships and dependencies of your data pipelines across all of your sources, so you always know, at a glance, where data is coming from and where it is going. We also provide you with a historical accounting of job execution, data production status, and system health in a single monitoring dashboard.