Tuesday, August 23, 2016

Tuesday, August 9, 2016

Azure Cloud Services Vs Web App For Deployment

Azure Web Sites enables you to build highly scalable web sites quickly on Azure. You can use the Azure Portal or the command-line tools to set up a web site with popular languages such as .NET, PHP, Node.js, and Python. Supported frameworks are already deployed and do not require more installation steps. The Azure Web Sites gallery contains many third-party applications, such as Drupal and WordPress as well as development frameworks such as Django and CakePHP. After creating a site, you can either migrate an existing web site or build a completely new web site. Web Sites eliminates the need to manage the physical hardware, and it also provides several scaling options. You can move from a shared multi-tenant model to a standard mode where dedicated machines service incoming traffic. Web Sites also enable you to integrate with other Azure services, such as SQL Database, Service Bus, and Storage. Using the Azure WebJobs SDK preview, you can add background processing. In summary, Azure Web Sites make it easier to focus on application development by supporting a wide range of languages, open source applications, and deployment methodologies (FTP, Git, Web Deploy, or TFS). If you don’t have specialized requirements that require Cloud Services or Virtual Machines, an Azure Web Site is most likely the best choice.

Cloud Services enable you to create highly-available, scalable web applications in a rich Platform as a Service (PaaS) environment. Unlike Web Sites, a cloud service is created first in a development environment, such as Visual Studio, before being deployed to Azure. Frameworks, such as PHP, require custom deployment steps or tasks that install the framework on role startup. The main advantage of Cloud Services is the ability to support more complex multitier architectures. A single cloud service could consist of a frontend web role and one or more worker roles. Each tier can be scaled independently. There is also an increased level of control over your web application infrastructure. For example, you can remote desktop onto the machines that are running the role instances. You can also script more advanced IIS and machine configuration changes that run at role startup, including tasks that require administrator control.

Thursday, July 28, 2016

Understanding Stream Analytics

What is Stream Analytics?

Stream Analytics is an event processing engine that can ingest events in real-time, whether from one data stream or multiple streams. Events can come from sensors, applications, devices, operational systems, websites, and a variety of other sources. Just about anything that can generate event data is fair game.

Stream Analytics provides high-throughput, low-latency processing, while supporting real-time stream computation operations. With a Stream Analytics solution, organizations can gain immediate insights into real-time data as well as detect anomalies in the data, set up alerts to be triggered under specific conditions, and make the data available to other applications and services for presentation or further analysis. Stream Analytics can also incorporate historical or reference data into the real-time streams to further enrich the information and derive better analytics.

Stream Analytics is built on a pull-based communication model that utilizes adaptive caching with configured size limits and timeouts. The service also adheres to a client-anchor model that provides built-in recovery and check-pointing capabilities. In addition, the service can persist data to protect against node or downstream failure.

To implement a streaming pipeline, developers create one or more jobs that define a stream’s inputs and outputs. The jobs also incorporate SQL-like queries that determine how the data should be transformed. In addition, developers can adjust a number of a job’s settings. For example, they can control when the job should start producing result output, how to handle events that do not arrive sequentially, and what to do when a partition lags behind others or does not contain data. Once a job is implemented, administrators can view the job’s status via the Azure portal.

Stream Analytics supports two input types, stream data and reference data, and two source types, Azure Event Hubs and Azure Blob storage. Event Hubs is a publish-subscribe data integration service that can consume large volumes of events from a wide range of sources. Blob storage is a data service for storing and retrieving binary large object (BLOB) files. The following table shows the types of data that Stream Analytics can handle and the supported sources and input formats for each.

Input type

|

Supported Sources

|

Supported Formats

|

Size Limits

|

Stream

|

Event Hubs

Blob storage

|

JSON

CSV

Avro

|

N/A

|

Reference

|

Blob storage

|

JSON

CSV

|

50 MB

|

A Stream Analytics job must include at least one stream input type. If Blob storage is used, the file must contain all events before they can be streamed to Stream Analytics. The file is also limited to a maximum size of 50 MB. In this sense, the stream is historical in nature, no matter how recently the file was created. Only Event Hubs can deliver real-time event streams.

Reference data is optional in a Stream Analytics job and can come only from Blob storage. Reference data can be useful for performing lookups or correlating data in multiple streams.

Once a job has the input it needs, the data can be transformed. To facilitate these transformations, Stream Analytics supports a declarative SQL-like language. The language includes a range of specialized functions and operators that let developers implement everything from simple filters to complex aggregations across correlated streams. The language’s SQL-like nature makes it relatively easy for developers to transform data without having to dig into the technical complexities of the underlying system.

The last piece of the job puzzle is the stream output. Stream Analytics can write the query results (the transformed data) to Azure SQL Database or Blob storage. SQL Database can be useful if the data is relational in nature or supports applications that require database hosting. Blob storage is a good choice for long-term archiving or later processing. A Stream Analytics job can also send data back to Event Hubs to support other streaming pipelines and applications.

According to Microsoft, Stream Analytics can scale to any volume of data, while still achieving high throughput and low latency. An organization can start with a system that supports only a few kilobytes of data per second and scale up to gigabytes per second as needed. Stream Analytics can also leverage the partitioning capabilities of Event Hubs. In addition, administrators can specify how much compute power to dedicate to each step of the pipeline in order to achieve the most efficient and cost-effective throughput.

The Azure real-time analytics stack

Stream Analytics was designed to work in conjunction with other Azure services. Data inputted into and outputted from a Stream Analytics job must come and go through those Azure services. The following diagram provides a conceptual overview of how the Azure layers fit together and data flows through those layers in order to provide a complete stream analytics solution.

The top layer shown in the figure represents the starting point. These are the data sources that generate the event data. The data can come from just about anywhere, whether a piece of equipment, mobile device, cloud service, ATM, aircraft, oil platform-any device, sensor, or operation that can transmit event data. The data source might connect directly to Event Hubs or Blob storage or go through a gateway that connects to Azure.

Event Hubs can ingest and integrate millions of events per second. The events can be in various formats and stream in at different velocities. Event Hubs persists the events for a configurable period of time, allowing the events to support multiple Stream Analytics jobs or other operations. Blob storage can also store event data and make it available to Stream Analytics for operations that rely on historical data. In addition, Blob storage can provide reference data to support operations such as correlating multiple event streams.

The next layer in the Azure stack is where the actual analytics occur. Stream Analytics provides built-in integration with Event Hubs to support seamless, real-time analytics and with Blob storage to facilitate access to historical event data and reference data.

In addition to Stream Analytics, Azure provides Machine Learning, a predictive analytics service for mining data and identifying trends and patterns across large data sets. After analyzing the data, Machine Learning can publish a model that can then be used to generate real-time predictions based on incoming event data in Stream Analytics.

Also at the analytics layer is HDInsight Storm, an engine similar to Stream Analytics. However, unlike Stream Analytics, Storm runs on dedicated HDInsight clusters and supports a more diversified set of languages. Stream Analytics provides a built-in, multi-tenant environment and supports only the SQL language. In general, Stream Analytics is more limited in scope but makes it easier for an organization to get started. Storm can ingest data from more services and is more expansive in scope, but requires more effort. This, of course, is just a basic overview of the differences between the two services, so be sure to check Microsoft resources for more information.

From the analytics layer, we move to what is primarily the storage layer, where data can be persisted for presentation or made available for further consumption. As noted earlier, Stream Analytics can send data to SQL Database or Blob storage. SQL Database is a managed database as a service (DBaaS) and can be a good choice when you require an interactive query response from the transformed data sets.

Stream Analytics can also persist data to Blob storage. From there, the data can again be processed as a series of events, used as part of an HDInsight solution, or made available to large-scale analytic operations such as machine learning. In addition, Stream Analytics can write data back to Event Hubs for consumption by other applications or services or to support additional Stream Analytics jobs.

The final layer in the analytics stack is presentation and consumption, which can include any number of tools and services. For example, Power Query in Excel includes a built-in native connection for accessing data directly from Blob storage, providing a self-service, in-memory environment for working with the processed event data. Another option is Power BI, which offers a rich set of self-service visualizations. With Power BI, users can interact directly with the processed event data in SQL Database. In addition, a wide range of applications and services can consume data from Blob storage, SQL Database, or Event Hubs, providing almost unlimited options for presenting the processed event data or consuming it for further analysis.

Putting Stream Analytics to Work

Stream Analytics, in conjunction with Event Hubs, provides the structure necessary to perform real-time stream analytics on large sets of data. It is not meant to replace batch-oriented services, but rather to offer a way to handle the growing influx of event data resulting from the expected IoT onslaught. Most organizations will still have a need for traditional transactional databases and data warehouses for some time to come.

Within the world of real-time analytics, the potential uses for services such as Stream Analytics are plenty. The following table provides an overview of only some of the possibilities.

Usage

|

Description

|

Examples

|

Connected devices

|

Monitor and diagnose real-time data from connected devices such as vehicles, buildings, or machinery in order to generate alerts, respond to events, or optimize operations.

|

Plan and schedule maintenance; coordinate vehicle usage and respond to changing traffic conditions; scale or repair systems.

|

Business operations

|

Analyze real-time data to respond to dynamic environments in order to take immediate action.

|

Provide stock trade analytics and alerts; recalculate pricing based on changing trends; adjust inventory levels.

|

Fraud detection

|

Monitor financial transactions in real-time to detect fraudulent activity.

|

Correlate a credit card’s use across geographic locations; monitor the number of transactions on a single credit card.

|

Website analytics

|

Collect real-time metrics to gain immediate insight into a website’s usage patters or application performance.

|

Perform clickstream analytics; test site layout and application features; determine an ad campaign’s impact; respond to degraded customer experience.

|

Customer dashboards

|

Provide real-time dashboards to customers so they can discover trends as they occur and be notified of events relevant to their operations.

|

Respond to a service, website, or application going down; view current user activity; view analytics based on data collected from devices or operations.

|

These scenarios are, of course, only a sampling of the ways an organization can reap the benefits of stream-processing analytics. Services such as Stream Analytics can also translate into significant savings, depending on the organization and type of implementation.

Currently, Microsoft prices Stream Analytics by the volume of processed data and the number of stream units used to process the data, at a per-hour rate. A stream unit is a compute capacity (CPU, memory, throughput), with a maximum throughput of 1 MB/s. Stream Analytics imposes a default quota of 12 streaming units per region, but requires no start-up or termination fees. Customers pay only for what they use, based on the following pricing structure:

- Data volume: $0.001/GB

- Streaming unit: $0.031/hour

Wednesday, June 15, 2016

Understanding Azure Data Factory

Data Factory: Fully managed service to build and manage information production pipelines

Organizations are increasingly looking to fully leverage all of the data available to their business. As they do so, the data processing landscape is becoming more diverse than ever before – data is being processed across geographic locations, on-premises and cloud, across a wide variety of data types and sources (SQL, NoSQL, Hadoop, etc), and the volume of data needing to be processed is increasing exponentially. Developers today are often left writing large amounts of custom logic to deliver an information production system that can manage and co-ordinate all of this data and processing work.

To help make this process simpler, I’m excited to announce the preview of our new Azure Data Factory service – a fully managed service that makes it easy to compose data storage, processing, and data movement services into streamlined, scalable & reliable data production pipelines. Once a pipeline is deployed, Data Factory enables easy monitoring and management of it, greatly reducing operational costs.

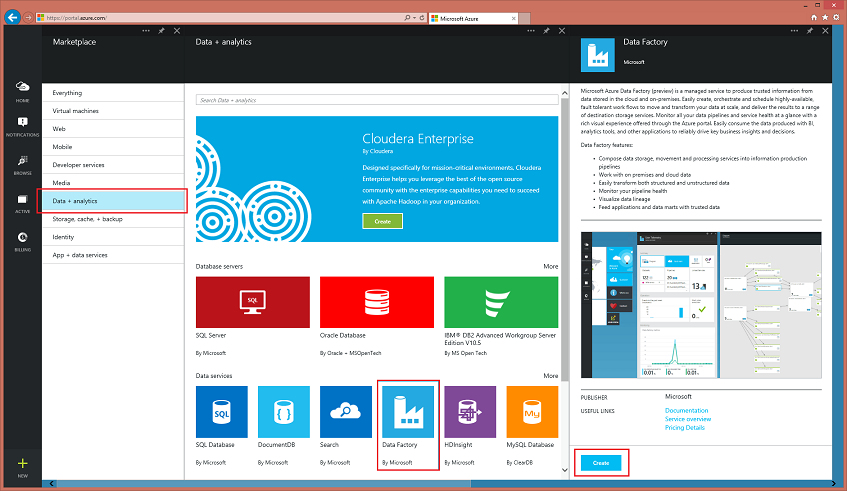

Easy to Get Started

The Azure Data Factory is a fully managed service. Getting started with Data Factory is simple. With a few clicks in theAzure preview portal, or via our command line operations, a developer can create a new data factory and link it to data and processing resources. From the new Azure Marketplace in the Azure Preview Portal, choose Data + Analytics –> Data Factory to create a new instance in Azure:

Orchestrating Information Production Pipelines across multiple data sources

Data Factory makes it easy to coordinate and manage data sources from a variety of locations – including ones both in the cloud and on-premises. Support for working with data on-premises inside SQL Server, as well as Azure Blob, Tables, HDInsight Hadoop systems and SQL Databases is included in this week’s preview release.

Access to on-premises data is supported through a data management gateway that allows for easy configuration and management of secure connections to your on-premises SQL Servers. Data Factory balances the scale & agility provided by the cloud, Hadoop and non-relational platforms, with the management & monitoring that enterprise systems require to enable information production in a hybrid environment.

Custom Data Processing Activities using Hive, Pig and C#

This week’s preview enables data processing using Hive, Pig and custom C# code activities. Data Factory activities can be used to clean data, anonymize/mask critical data fields, and transform the data in a wide variety of complex ways.

The Hive and Pig activities can be run on an HDInsight cluster you create, or alternatively you can allow Data Factory to fully manage the Hadoop cluster lifecycle on your behalf. Simply author your activities, combine them into a pipeline, set an execution schedule and you’re done – no manual Hadoop cluster setup or management required.

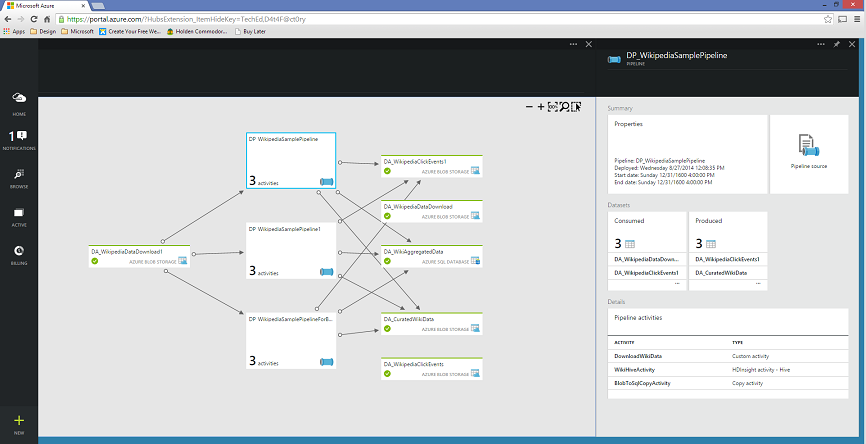

Built-in Information Production Monitoring and Dashboarding

Data Factory also offers an up-to-the moment monitoring dashboard, which means you can deploy your data pipelines and immediately begin to view them as part of your monitoring dashboard. Once you have created and deployed pipelines to your Data Factory you can quickly assess end-to-end data pipeline health, pinpoint issues, and take corrective action as needed.

Within the Azure Preview Portal, you get a visual layout of all of your pipelines and data inputs and outputs. You can see all the relationships and dependencies of your data pipelines across all of your sources so you always know where data is coming from and where it is going at a glance. We also provide you with a historical accounting of job execution, data production status, and system health in a single monitoring dashboard:

Wednesday, May 11, 2016

SSIS dataware house to load data into sql azure datawarehouse

Step 1: Create a new Integration Services project

- Launch Visual Studio 2015.

- On the File menu, select New | Project.

- Navigate to the Installed | Templates | Business Intelligence | Integration Services project types.

- Select Integration Services Project. Provide values for Name and Location, and then selectOK.

Visual Studio opens and creates a new Integration Services (SSIS) project. Then Visual Studio opens the designer for the single new SSIS package (Package.dtsx) in the project. You see the following screen areas:

- On the left, the Toolbox of SSIS components.

- In the middle, the design surface, with multiple tabs. You typically use at least the Control Flow and the Data Flow tabs.

- On the right, the Solution Explorer and the Properties panes.

Step 2: Create the basic data flow

- Drag a Data Flow Task from the Toolbox to the center of the design surface (on theControl Flow tab).

- Double-click the Data Flow Task to switch to the Data Flow tab.

- From the Other Sources list in the Toolbox, drag an ADO.NET Source to the design surface. With the source adapter still selected, change its name to SQL Server source in theProperties pane.

- From the Other Destinations list in the Toolbox, drag an ADO.NET Destination to the design surface under the ADO.NET Source. With the destination adapter still selected, change its name to SQL DW destination in the Properties pane.

Step 3: Configure the source adapter

- Double-click the source adapter to open the ADO.NET Source Editor.

- On the Connection Manager tab of the ADO.NET Source Editor, click the New button next to the ADO.NET connection manager list to open the Configure ADO.NET Connection Manager dialog box and create connection settings for the SQL Server database from which this tutorial loads data.

- In the Configure ADO.NET Connection Manager dialog box, click the New button to open the Connection Manager dialog box and create a new data connection.

- In the Connection Manager dialog box, do the following things.

- For Provider, select the SqlClient Data Provider.

- For Server name, enter the SQL Server name.

- In the Log on to the server section, select or enter authentication information.

- In the Connect to a database section, select the AdventureWorks sample database.

- Click Test Connection.

- In the dialog box that reports the results of the connection test, click OK to return to the Connection Manager dialog box.

- In the Connection Manager dialog box, click OK to return to the Configure ADO.NET Connection Manager dialog box.

- In the Configure ADO.NET Connection Manager dialog box, click OK to return to theADO.NET Source Editor.

- In the ADO.NET Source Editor, in the Name of the table or the view list, select theSales.SalesOrderDetail table.

- Click Preview to see the first 200 rows of data in the source table in the Preview Query Results dialog box.

- In the Preview Query Results dialog box, click Close to return to the ADO.NET Source Editor.

- In the ADO.NET Source Editor, click OK to finish configuring the data source.

Step 4: Connect the source adapter to the destination adapter

- Select the source adapter on the design surface.

- Select the blue arrow that extends from the source adapter and drag it to the destination editor until it snaps into place.

In a typical SSIS package, you use a number of other components from the SSIS Toolbox in between the source and the destination to restructure, transform, and cleanse your data as it passes through the SSIS data flow. To keep this example as simple as possible, we’re connecting the source directly to the destination.

In a typical SSIS package, you use a number of other components from the SSIS Toolbox in between the source and the destination to restructure, transform, and cleanse your data as it passes through the SSIS data flow. To keep this example as simple as possible, we’re connecting the source directly to the destination.

Step 5: Configure the destination adapter

- Double-click the destination adapter to open the ADO.NET Destination Editor.

- On the Connection Manager tab of the ADO.NET Destination Editor, click the New button next to the Connection manager list to open the Configure ADO.NET Connection Manager dialog box and create connection settings for the Azure SQL Data Warehouse database into which this tutorial loads data.

- In the Configure ADO.NET Connection Manager dialog box, click the New button to open the Connection Manager dialog box and create a new data connection.

- In the Connection Manager dialog box, do the following things.

- For Provider, select the SqlClient Data Provider.

- For Server name, enter the SQL Data Warehouse name.

- In the Log on to the server section, select Use SQL Server authentication and enter authentication information.

- In the Connect to a database section, select an existing SQL Data Warehouse database.

- Click Test Connection.

- In the dialog box that reports the results of the connection test, click OK to return to the Connection Manager dialog box.

- In the Connection Manager dialog box, click OK to return to the Configure ADO.NET Connection Manager dialog box.

- In the Configure ADO.NET Connection Manager dialog box, click OK to return to theADO.NET Destination Editor.

- In the ADO.NET Destination Editor, click New next to the Use a table or view list to open the Create Table dialog box to create a new destination table with a column list that matches the source table.

- In the Create Table dialog box, do the following things.

- Change the name of the destination table to SalesOrderDetail.

- Remove the rowguid column. The uniqueidentifier data type is not supported in SQL Data Warehouse.

- Change the data type of the LineTotal column to money. The decimal data type is not supported in SQL Data Warehouse. For info about supported data types, see CREATE TABLE (Azure SQL Data Warehouse, Parallel Data Warehouse).

- Click OK to create the table and return to the ADO.NET Destination Editor.

- In the ADO.NET Destination Editor, select the Mappings tab to see how columns in the source are mapped to columns in the destination.

- Click OK to finish configuring the data source.

Step 6: Run the package to load the data

Run the package by clicking the Start button on the toolbar or by selecting one of the Runoptions on the Debug menu.

As the package begins to run, you see yellow spinning wheels to indicate activity as well as the number of rows processed so far.

When the package has finished running, you see green check marks to indicate success as well as the total number of rows of data loaded from the source to the destination.

Wednesday, April 20, 2016

MS SQL Azure with Power BI

Connecting through Power BI

Connect to the Azure SQL Database connector for Power BI.

- Select Get Data at the bottom of the left navigation pane.

- Within Databases, select Get.

- Select Azure SQL Database > Connect.

- Enter the necessary information to connect. The Finding Parameters section below shows where this data can be located in your Azure Portal.

Note: The username will be a user that is defined in your Azure SQL Database instance.

Note: The username will be a user that is defined in your Azure SQL Database instance. - After you've connected, you see a new dataset with the same name as the database you connected to, and a placeholder tile for the dataset, created on the dashboard.

- Selecting the placeholder tile for the dataset opens a new report, where you can explore all of the tables and columns in your database. Selecting a column will send a query back to the source, dynamically creating your visual. These visuals can be saved in a new report, and pinned back to your dashboard.

Finding Parameter Values

Your fully qualified server name and database name can be found in the Azure Portal.

Wednesday, April 6, 2016

Understanding Azure Data Lakes and Analytics

The data lake serves as an alternative to multiple information silos typical of enterprise environments. The data lake does not care where the data came from or how it was used. It is indifferent to data quality or integrity. It is concerned only with providing a common repository from which to perform in-depth analytics. Only then is any sort of structure imposed upon the data.

As the popularity of the data lake grows, so too does the number of vendors jumping into data lake waters, each bringing its own idea of what a data lake entails. While any data lake solution will have at its core a massive repository, some vendors also roll in an analytics component or two, which is exactly what Microsoft is planning to do. As the following figure shows, the Azure Data Lake platform comprises three primary services: Data Lake Store, Data Lake Analytics, and Azure HDInsight.

Data Lake Store provides the repository necessary to persist the influx of data, and Data Lake Analytics offers a mechanism for picking apart that data. Both components are now in public preview. Microsoft has also rolled HDInsight into the Data Lake mix, a service that offers a wide range of Hadoop-based tools for additional analytic capabilities. To facilitate access between the storage and analytic layers, the Data Lake platform leverages Apache YARN (Yet Another Resource Negotiator) and WebHDFS-compatible REST APIs.

Azure Data Lake Store

Microsoft describes Data Lake Store as a “hyper-scale repository for big data analytic workloads,” a mouthful, to be sure, but descriptive nonetheless. The service will let you store data of any size, type, or ingestion speed, whether originating from social networks, relational databases, web-based applications, line-of-business (LOB) applications, mobile and desktop devices, or a variety of other sources. The repository provides unlimited storage without restricting file sizes or data volumes. An individual file can be petabytes in size, with no limit on how long you keep it there.

Data Lake Store uses a Hadoop file system to support compatibility with the Hadoop Distributed File System (HDFS), making the data store accessible to a wide range of Hadoop-friendly tools and services. Data Lake Store is already integrated with Data Lake Analytics and HDInsight, as well as Azure Data Factory; however, Microsoft also plans eventual integration with services such as Microsoft’s Revolution-R Enterprise, distributions from Hortonworks, Cloudera, and MapR, and Hadoop projects such as Spark, Storm, and HBase.

To protect the data, Microsoft makes redundant copies for ensuring durability and promises enterprise-grade security, based on Azure Active Directory (AAD). The AAD service manages identity and access for all stored data, providing multifactor authentication, role-based access control, conditional access, and numerous other features.

To use AAD to protect data, you must first create AAD security groups to facilitate role-based access control in Azure Portal. Next, you must assign the security groups to the Data Lake Store account, which control access to the repository for portal and management operations. The next step is to assign the security group to the access control lists (ACLs) associated with the repository’s file system. Currently, you can assign access control permissions only at the repository level, but Microsoft is planning to add folder- and file-level controls in a future release.

Data Lake Store supports POSIX-style permissions exposed through the WebHDFS-compatible REST APIs. The WebHDFS protocol makes it possible to support all HDFS operations, not only read and write, but also such operations as accessing block locations and configuring replication factors. In addition, WebHDFS can use the full bandwidth of the Hadoop cluster for streaming data.

Data Lake Store also implements a new file system-AzureDataLakeFilesystem (adl://)-for directly accessing the repository. Applications and services capable of using the file system can realize additional flexibility and performance gains over WebHDFS. Systems not compatible with the new file system can continue to use the WebHDFS-compatible APIs.

Azure Data Lake Analytics

Data Lake Analytics represents one of Microsoft’s newest cloud offerings, appearing on the scene only within the last couple months. According to Microsoft, the company built Data Lake Analytics from the ground up with scalability and performance in mind. The service provides a distributed infrastructure that can dynamically allocate or de-allocate resources so customers pay for only the services they use.

As with similar cloud platforms, Data Lake Analytics users can focus on the business logic, rather than on the logistics of how to implement systems and process large data sets. The service handles all the complex management tasks so customers can develop and execute their solutions without worrying about deploying or maintaining the infrastructure to support them.

Data Lake Analytics is also integrated with AAD, making it easier to manage users and permissions. It is also integrated with Visual Studio, providing developers with a familiar environment for creating analytic solutions.

A solution built for the Data Lake Analytics service is made up of one or more jobs that define the business logic. A job can reference data within Data Lake Store or Azure Blob storage, impose a structure on that data, and process the data in various ways. When a job is submitted Data Lake Analytics, the service will access the source data, carry out the defined operations, and output the results to Data Lake Store or Blob storage.

Azure Data Lake provides several options for submitting jobs to Data Lake Analytics:

- Use Azure Data Lake Tools in Visual Studio to submit jobs directly.

- Use the Azure Portal to submit jobs via the Data Lake Analytics account.

- Use the Data Lake SDK job submission API to submit jobs programmatically.

- Use the job submission command available through the Azure PowerShell extensions to submit jobs programmatically.

The U-SQL difference

A job is actually a U-SQL script that instructs the service on how to process the data. U-SQL is a new language that Microsoft developed for writing scalable, distributed queries that analyze data. An important function of the language is its ability to process unstructured data by applying schema on read logic, which imposes a structure on the data as you retrieve it from its source. The U-SQL language also lets you insert custom logic and user-defined functions into your scripts, as well as provides fine-grained control over how to run a job at scale.

U-SQL evolved out of Microsoft’s internal big data language SCOPE (Structured Computations Optimized for Parallel Execution), a SQL-like language that supports set-oriented record and column manipulation. U-SQL is a type of hybrid language that combines the declarative capabilities of SQL with the extensibility and programmability of C#. The language also incorporates big data processing concepts such as custom reducers and processors, as well as schema on reads.

Not surprisingly, U-SQL has its own peculiarities. Keywords such as

SELECT must be all uppercase, and the expression language within clauses such as SELECT and WHERE use C# syntax. For example, a WHERE clause equality operator would take two equal signs, and a string value would be enclosed in double quotes, as in WHERE Veggie == "tiger nut" .

In addition, the U-SQL type system is based on C# types, providing tight integration with the C# language. You can use any C# type in a U-SQL expression. However, you can use only a subset of C# types to define rowset columns or certain other schema objects. The usable types are referred to as built-in U-SQL types and can be classified as simple built-in types or complex built-in types . The simple built-in types include your basic numeric, string, and temporal types, along with a few others, and the complex ones include map and array types.

You can also use C# to extend your U-SQL expressions. For example, you can add inline C# expressions to your script, which can be handy if you have a small set of C# methods you want to use to process scalar values. In addition, you can write user-defined functions, aggregators, and operators in C# assemblies, load the assemblies into the U-SQL metadata catalog, and then reference the assemblies within your U-SQL scripts.

Data Lake Analytics executes a U-SQL job as a batch script, with data retrieved in a rowset format. If the source data are files, U-SQL schematizes the data on extract. However, the source data can also be U-SQL tables or tables in other data sources, such as Azure SQL Database, in which case it does not need to be schematized. In addition, you can define a U-SQL job to transform the data before storing it in a file or U-SQL table. The language also supports data definition statements such as

CREATE TABLE so you can define metadata artifacts.

Subscribe to:

Comments (Atom)